Key Points

- Clearly define the problem associated with managing the asset: what is happening now, and what do you want to happen?

- Think about second and third order data and how it relates to the problem

- Combine system and domain knowledge with data science expertise to plan a solution. Consider combining some of the approaches outlined below.

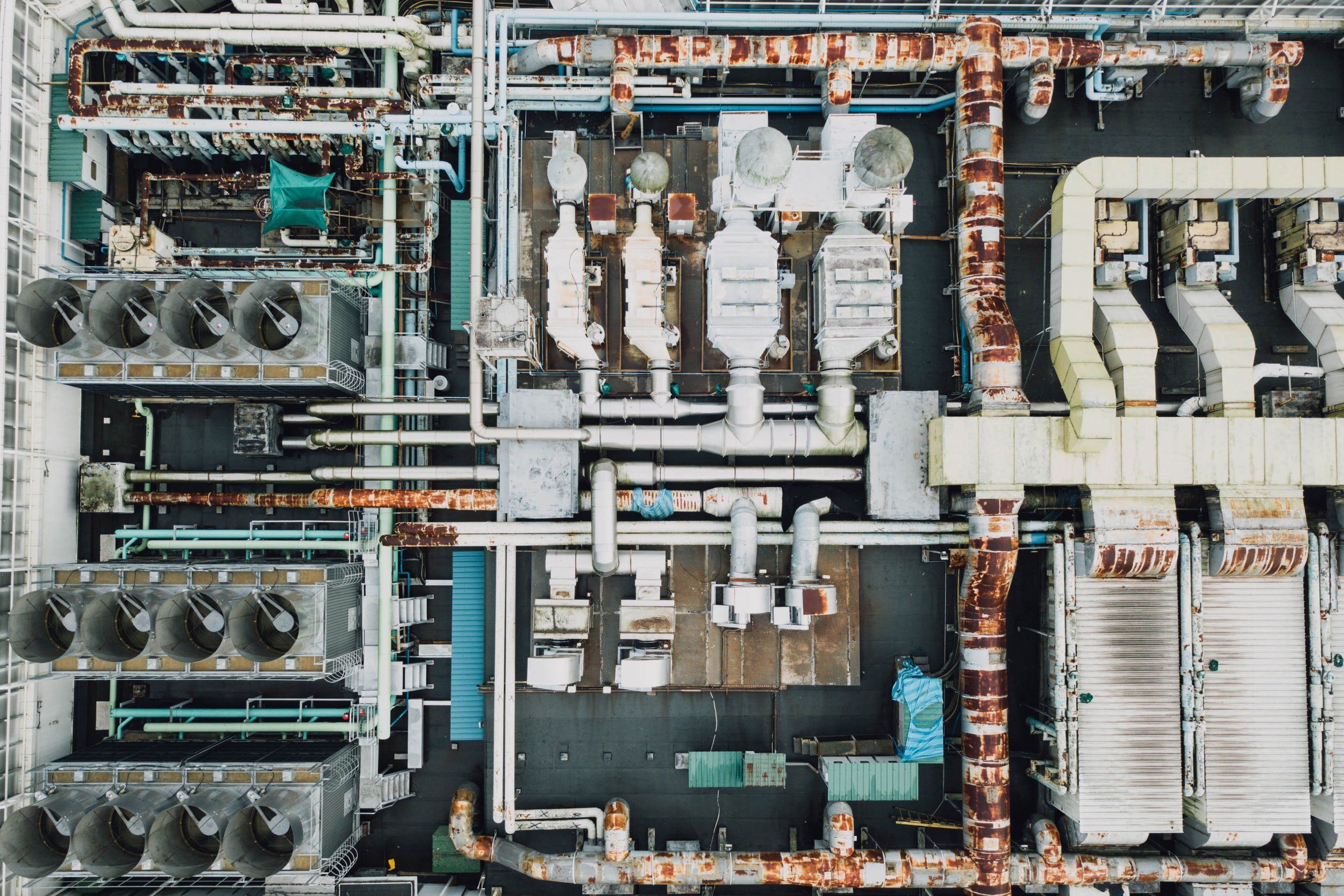

We are often asked how data science, ML and AI can be applied to industrial systems that have been in operation for decades and aren’t obvious candidates for innovation or cutting-edge technology. Customers may worry that older systems lack the data infrastructure to generate useful insight to drive intervention, and that established, traditional processes are blockers to change. In some cases the lack of data and sensor infrastructure on legacy assets will indeed prohibit innovation. More often, though, the limitations can be overcome by combining different data science approaches and thinking creatively about the specific problem in question. A major advantage legacy assets have over newer systems is potential access to large amounts of operational data. This opens up the opportunity for powerful machine learning applications that deliver significant operational benefit.

In this post we discuss how a broad understanding of different data science techniques, coupled with domain knowledge and a creative mindset, can generate powerful insights from legacy assets.

Don’t let age put you off

It is important to recognise the difference between a legacy asset and its associated data architecture. Often, despite the asset itself being old, its sensors are relatively new and well integrated with the organisation’s data historian: an old machine doesn’t always mean no data. Arguably, a legacy asset fitted with a modern sensor system presents the best opportunity for data science applications, since it offers a large data set covering a long operational history.

Where legacy assets don’t have a good data architecture, it is important to consider second and third order data sources. These are sources that aren’t directly associated with the operational elements of the asset but can still be measured or acquired.

It is also vital that the problem or opportunity associated with the asset is clearly defined and well bounded to the system. For example, is there an opportunity to increase safety, reduce maintenance or reduce emissions? What pain is this problem causing the business? Our post on digital twins explores problem framing in more detail. Here, we look at how different data science approaches can be used to extract value from legacy assets with limited data architecture.

Legacy assets with limited data architecture

Some legacy assets will continue to operate, or will require some form of monitoring and preservation, without a sophisticated sensor network. They may be in dangerous or inaccessible locations, or may simply not have been updated since installation. The operational landscape may be changing so that some aspect of their performance now needs attention. Yet opportunities for data-driven solutions seem limited given the perceived lack of data. Abstracting the problem to second and third order data sets, and applying machine learning to them, can generate powerful insights to drive decision making. This approach, combined with making the most of whatever first order data is available (if any), opens up the potential for sophisticated data-driven plant condition monitoring and performance optimisation.

The following approaches can be used in combination to drive intervention in legacy assets with a limited data architecture.

Use of proxy indicators

Carefully considering the relationship between first and second order parameters may reveal an accessible source of useful data, from which insight about the area of interest can be inferred. Understanding what is sufficient to address the core problem is crucial. For example, an operator may be interested in bearing health but unable to monitor vibration. Using factors like oil temperature, metal temperature and noise as proxies may be sufficient. A perfect answer may not always be possible or even required, and ‘imperfect’ data can still deliver the insights needed to guide decision making.
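To make this concrete, here is a minimal Python sketch of one possible bearing proxy. It assumes hourly readings of oil temperature, ambient temperature and load are available. The field names are illustrative assumptions, not a recommended method. So are the choice of “temperature rise per unit load” as the proxy and the three-sigma threshold.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Reading:
    oil_temp_c: float      # bearing oil temperature (hypothetical tag)
    ambient_temp_c: float  # ambient temperature near the machine
    load_fraction: float   # load as a fraction of rated load, 0 < load <= 1


def temperature_rise_per_load(r: Reading) -> float:
    """Proxy for bearing condition: oil temperature rise over ambient, per unit load."""
    return (r.oil_temp_c - r.ambient_temp_c) / r.load_fraction


def flag_drift(baseline: list[Reading], recent: list[Reading], k: float = 3.0) -> list[bool]:
    """Flag recent readings whose proxy exceeds the baseline mean by more than k standard deviations."""
    proxy_baseline = [temperature_rise_per_load(r) for r in baseline]
    mu, sigma = mean(proxy_baseline), stdev(proxy_baseline)
    return [temperature_rise_per_load(r) > mu + k * sigma for r in recent]
```

Subtracting ambient temperature and normalising by load removes two obvious sources of variation unrelated to bearing condition. A sustained rise in the proxy is therefore more likely to point to a lubrication or friction problem.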

Use of external environmental monitoring

It may be possible to install an external sensor network to understand plant behaviour, using acoustic monitoring, thermal monitoring and other sensors (e.g. humidity). Combining the data from these sensors with signal processing and anomaly detection techniques can enable operators to monitor plant health, detect adverse behaviour and pinpoint faults. Our post on acoustic monitoring explores this in more detail.
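The anomaly detection step can be sketched in a few lines. This hedged sketch splits an acoustic signal into fixed-length windows and computes the broadband RMS level of each. It learns a baseline from a recording made while the plant was known to be healthy. It then flags live windows whose level departs from that baseline by more than a z-score limit. The window length and limit are hypothetical. A real system would typically also examine frequency content (for example, band energies from an FFT) rather than overall level alone.

```python
import math
from statistics import mean, stdev


def window_rms(signal: list[float], window: int) -> list[float]:
    """RMS amplitude of each non-overlapping window; a trailing partial window is dropped."""
    n_windows = len(signal) // window
    return [
        math.sqrt(sum(x * x for x in signal[i * window:(i + 1) * window]) / window)
        for i in range(n_windows)
    ]


def detect_anomalies(baseline: list[float], live: list[float], window: int,
                     z_limit: float = 4.0) -> list[int]:
    """Indices of live windows whose RMS z-score against the healthy baseline exceeds z_limit."""
    base_rms = window_rms(baseline, window)
    mu, sigma = mean(base_rms), stdev(base_rms)
    if sigma == 0:
        raise ValueError("baseline RMS has no variation; cannot compute z-scores")
    return [i for i, rms in enumerate(window_rms(live, window))
            if abs(rms - mu) / sigma > z_limit]
```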

Text mining

Some legacy assets may have limited instrumentation but are routinely maintained and inspected. This often produces a comprehensive maintenance log, albeit one that can be inconsistent and irregular. Nevertheless, natural language processing can extract useful information from this unstructured text to support decision making around maintenance and plant performance. We explore natural language processing technology in more detail here.
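A full NLP pipeline is beyond the scope of a blog post, but a simple first step can be sketched. The code tags free-text log entries against small, hypothetical vocabularies of components and symptoms. These vocabularies include common shorthand such as “brg”. It then counts how often each pairing recurs. This is rule-based keyword matching rather than a statistical language model, and the vocabularies are illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical vocabularies; in practice they would be built with engineers who know the plant.
COMPONENTS = {
    "bearing": r"\bbearings?\b|\bbrg\b",
    "seal": r"\bseals?\b",
    "valve": r"\bvalves?\b|\bvlv\b",
}
SYMPTOMS = {
    "leak": r"\bleak(?:s|ing|ed)?\b",
    "noise": r"\bnois(?:e|y)\b|\brattl(?:e|ing)\b",
    "overheating": r"\bhot\b|\boverheat(?:ing|ed)?\b|\bhigh temp\b",
}


def tag_entry(text: str) -> set[tuple[str, str]]:
    """(component, symptom) pairs mentioned together in one free-text log entry."""
    lowered = text.lower()
    comps = [c for c, pat in COMPONENTS.items() if re.search(pat, lowered)]
    symps = [s for s, pat in SYMPTOMS.items() if re.search(pat, lowered)]
    return {(c, s) for c in comps for s in symps}


def summarise(log: list[str]) -> Counter:
    """Count how often each (component, symptom) pair appears across the whole log."""
    counts: Counter = Counter()
    for entry in log:
        counts.update(tag_entry(entry))
    return counts
```

Recurring pairs, such as the same seal leaking repeatedly, can then be reviewed by engineers or used as features alongside other data.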

Soft sensor technology

Soft sensors extend the idea of proxy indicators: they combine several second order measurements to infer a first order value. That value is then used to make decisions about performance and plant health. An example is presented in our case study on steam leak detection. There, the steam leak rate from a turbine was calculated using a combination of pressure, temperature and valve position. Soft sensors can incorporate a number of machine learning approaches and are often fundamental to digital twin architecture. More detail on soft sensors can be found in our blog here.
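The core of a simple soft sensor can be sketched as a regression from second order measurements to the first order value of interest. The example below fits an ordinary least squares model from pressure, temperature and valve position to a reference target. It assumes a period of reference data exists to train against. Linear regression is a deliberately simple, assumed choice, not necessarily the model used in the steam leak case study. The function names are hypothetical.

```python
def fit_linear(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X^T X) b = X^T y, with an intercept."""
    rows = [[1.0] + list(x) for x in X]  # prepend a constant column for the intercept
    n = len(rows[0])
    # Augmented normal-equation matrix [X^T X | X^T y]
    A = []
    for i in range(n):
        xtx_row = [sum(r[i] * r[j] for r in rows) for j in range(n)]
        xty = sum(r[i] * t for r, t in zip(rows, y))
        A.append(xtx_row + [xty])
    # Forward elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for k in range(col + 1, n):
            factor = A[k][col] / A[col][col]
            for c in range(col, n + 1):
                A[k][c] -= factor * A[col][c]
    # Back substitution
    coef = [0.0] * n
    for i in reversed(range(n)):
        coef[i] = (A[i][n] - sum(A[i][j] * coef[j] for j in range(i + 1, n))) / A[i][i]
    return coef  # [intercept, pressure, temperature, valve position]


def predict(coef: list[float], x: list[float]) -> float:
    """Soft-sensor estimate for one set of (pressure, temperature, valve position) readings."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], x))
```

Plain Python is used only to keep the example self-contained. In practice, `numpy.linalg.lstsq` or a scikit-learn regressor would be the usual choice, since the normal equations can be numerically fragile when inputs are strongly correlated. Any fitted model should also be validated on held-out data before its output is trusted.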

Plant walkdowns

Defining a set of parameters to record on plant walkdowns can be an effective way of capturing performance data in the absence of plant instrumentation. Although they may lack digital connectivity, many legacy assets still have local instrumentation that can be used to monitor health and performance. Capturing readings from analogue dials is common practice, but the depth and sophistication of the subsequent analysis varies hugely. Plant walkdown data can also provide important contextual labels for more sophisticated ML analysis.
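One way to use walkdown readings as contextual labels is an “as-of” join. Each timestamp in a continuous data stream, such as an external sensor feed, is paired with the most recent manual reading taken at or before it. The sketch below assumes each walkdown record holds a timestamp, gauge name, value and optional operator note. That record layout is hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class WalkdownReading:
    taken_at: datetime
    gauge: str      # hypothetical gauge identifier, e.g. "lube oil pressure"
    value: float    # value read from the local analogue dial
    note: str = ""  # operator's free-text observation


def label_as_of(timestamps: list[datetime],
                readings: list[WalkdownReading]) -> list[Optional[WalkdownReading]]:
    """For each timestamp, return the latest walkdown reading taken at or before it.

    `readings` should all come from one gauge; timestamps before the first reading get None.
    """
    ordered = sorted(readings, key=lambda r: r.taken_at)
    times = [r.taken_at for r in ordered]
    labels: list[Optional[WalkdownReading]] = []
    for ts in timestamps:
        idx = bisect_right(times, ts)  # number of readings taken at or before ts
        labels.append(ordered[idx - 1] if idx > 0 else None)
    return labels
```

In practice you would probably also discard labels older than some maximum age, since a week-old dial reading says little about current conditions.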

Physics models

Plant behaviour can be modelled from an understanding of fundamental operating principles and physical laws. These models can be combined with broader contextual data derived from the approaches above. Together, they can serve as inputs to machine learning models that predict a variety of outcomes relating to plant health or operational behaviour.
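The sketch below illustrates one way to feed a physics model into machine learning. It uses the pump affinity law (shaft power scales with the cube of speed) to estimate the power a pump should draw at its current speed. The estimate is based on one hypothetical reference operating point. The gap between measured and expected power then becomes a feature for a downstream model. The pump, the reference values and the feature names are assumptions for illustration. The affinity laws also hold only approximately, for example when fluid properties are unchanged and the system is friction dominated.

```python
from dataclasses import dataclass


@dataclass
class PumpReference:
    speed_rpm: float  # reference speed, e.g. from commissioning or test data (hypothetical)
    power_kw: float   # shaft power measured at that reference speed


def expected_power_kw(ref: PumpReference, speed_rpm: float) -> float:
    """Pump affinity law: power scales with the cube of rotational speed."""
    return ref.power_kw * (speed_rpm / ref.speed_rpm) ** 3


def physics_features(ref: PumpReference, speed_rpm: float,
                     measured_power_kw: float) -> dict[str, float]:
    """Features for an ML model: the physics expectation and how far the measurement departs from it."""
    expected = expected_power_kw(ref, speed_rpm)
    return {
        "speed_rpm": speed_rpm,
        "expected_power_kw": expected,
        "power_residual_kw": measured_power_kw - expected,
        "power_ratio": measured_power_kw / expected,
    }
```

Because the physics model already explains the dominant effect of speed, the ML model only has to learn what the residual means. Such a residual might reflect wear, fouling or other deviations, which is often easier to learn from limited data.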

This is not an exhaustive list; it is intended to promote wider thinking around the use of second and third order data to support decision making. Combining some of the approaches above with a clear understanding of the problem, a creative mindset and expert system knowledge can lead to powerful insights, even when direct plant data is scarce. For more information, or if you have a specific problem you’d like to discuss, please get in touch.