Key Points

- Audio data can contain a lot of information indicative of system performance. The unstructured nature of this data type lends itself best to deep learning approaches

- Acoustic emissions can be modelled to detect irregular system performance or specific failure mechanisms, such as leaks in waste-water pipes or symptoms of poor motor health

Industrial Application – Water sector

Water companies widely use acoustic loggers to inspect waste-water infrastructure for potential leaks. The logged signals are processed to obtain a small number of features, which are used within a rule-based system to decide if a manual inspection is required. The result of that inspection then decides whether a leak repair job is scheduled.

Currently, 60% of manual inspections result in identifying a leak. An ongoing challenge is to increase the proportion of manual inspections that find a leak by combining the limited features extracted from the acoustic logger with geological features and contextual information on the asset of interest.

Ada Mode have experimented with predicting the inspection outcome from the provided data, with limited success. We expect that the performance of this modelling approach is restricted by the experiment design and by the limited features extracted from the acoustic logger.

Here we present methods of using unstructured audio data more directly for detection of leaks and other faults such as adverse motor operation.

Audio Classification – Faulty Motor Detection

When a specific class or symptom needs to be detected from audio, such as in the leak detection problem, classification methods can be used.

Here we explore a dataset provided by Ford Motor Company for an IEEE WCCI competition in 2008. The data contains 3601 samples of engine audio signals, with the goal of detecting the presence of some undesired symptom. Every signal has the same length of 500 time steps and a constant sampling frequency.

Deep learning techniques can be used to handle these unstructured data types directly without the need for specific feature extractors as used in the acoustic leak detection.

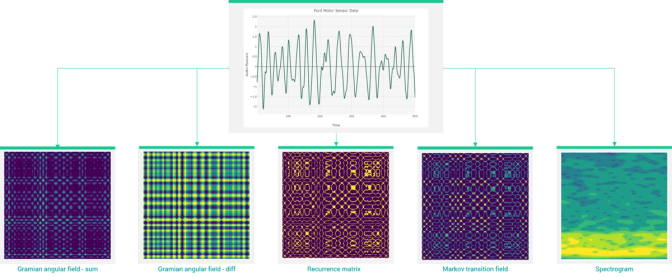

In audio classification it is common to embed the original signal into an image such as a spectrogram. Figure 1 shows some different options for embedding time series into images. This is done to express a wider variety of features of the audio clip than the raw amplitude over time, and to enable the use of powerful techniques developed for computer vision. For large audio files we can divide each audio signal into manageable chunks and apply a voting system at prediction time to get the overall label of the whole audio clip.
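The chunk-and-vote idea can be sketched in a few lines of NumPy. This is a minimal illustration rather than the pipeline used here: the chunk length, frame length, hop size and the `classify_image` callable (standing in for a trained image classifier) are all assumptions made for the example.

```python
from collections import Counter

import numpy as np


def split_into_chunks(signal, chunk_len):
    """Split a 1-D signal into non-overlapping chunks, dropping any remainder."""
    n_chunks = len(signal) // chunk_len
    return signal[: n_chunks * chunk_len].reshape(n_chunks, chunk_len)


def spectrogram(chunk, frame_len=64, hop=32):
    """Magnitude spectrogram (frequency x time) from a Hann-windowed short-time FFT."""
    window = np.hanning(frame_len)
    starts = range(0, len(chunk) - frame_len + 1, hop)
    frames = np.stack([chunk[s : s + frame_len] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1)).T


def predict_clip(signal, classify_image, chunk_len=512):
    """Label a whole clip by majority vote over per-chunk predictions.

    `classify_image` is a hypothetical callable mapping one spectrogram
    image to a class label; in practice it would wrap a trained model.
    """
    chunks = split_into_chunks(np.asarray(signal, dtype=float), chunk_len)
    if len(chunks) == 0:
        raise ValueError("signal is shorter than one chunk")
    votes = [classify_image(spectrogram(chunk)) for chunk in chunks]
    return Counter(votes).most_common(1)[0][0]
```

With a majority vote, a clip is assigned whichever label most of its chunks receive, so a single misclassified chunk does not change the overall result. Ties are resolved in favour of the label seen first, which a production system would probably want to handle more deliberately.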

For this problem we applied a recurrence plot embedding to represent each signal as an image. As the signals are short and have already been downsampled, there is no need to split each signal into chunks. After applying the embedding to each signal, we can train image classification models such as convolutional neural networks to detect the faulty motors.
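As a rough illustration of the embedding, the sketch below builds a recurrence plot from a 1-D signal by comparing every pair of time steps. It assumes the simplest form (no time-delay embedding, absolute difference as the distance); the optional `threshold` parameter is a choice made for this example and is not taken from the original experiment.

```python
import numpy as np


def recurrence_plot(signal, threshold=None):
    """Embed a 1-D signal of length N as an N x N image.

    Entry (i, j) is the distance |x_i - x_j| between two time steps.
    If `threshold` is given, the image is binarised so that 1 marks
    pairs of time steps whose values are within `threshold` of each other.
    """
    x = np.asarray(signal, dtype=float)
    dist = np.abs(x[:, None] - x[None, :])  # pairwise distances via broadcasting
    if threshold is None:
        return dist
    return (dist <= threshold).astype(np.uint8)


# A 500-step signal, as in the dataset described above, gives a 500 x 500 image.
image = recurrence_plot(np.sin(np.linspace(0, 20, 500)))
```

The resulting image is symmetric about its diagonal, and repeating structure in the signal appears as diagonal lines and textures that a convolutional network can pick up.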

The final model was able to match the original competition winner’s performance of ~90% accuracy without the requirement for extensive feature extraction techniques.

In deployment, this model can be used to identify faulty motors from new audio samples. The modelling pipeline constructs the image representation(s) of the audio, scans the image(s) for features indicative of faulty motor behaviour, and directs the necessary maintenance activity accurately and automatically.

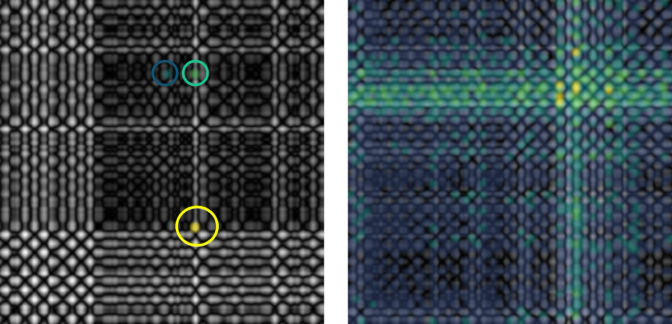

We can use a technique known as Grad-CAM (gradient-weighted class activation mapping) to identify regions of the image that the classifying model is using to make decisions.
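The core of the calculation is small enough to sketch in NumPy. The function below assumes the feature maps of the last convolutional layer, and the gradients of the target class score with respect to those maps, have already been extracted from the trained network by whichever deep learning framework is in use. The function and argument names are illustrative only.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a normalised class activation heatmap.

    Both inputs have shape (channels, height, width): `activations` holds
    the feature maps of the last convolutional layer, and `gradients` holds
    the gradient of the target class score with respect to those maps.
    """
    # One importance weight per channel: the spatially averaged gradient.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps gives a (height, width) map.
    cam = np.tensordot(weights, activations, axes=1)
    # Keep only regions that push the class score up.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The heatmap has the spatial resolution of the convolutional layer, so it is normally upsampled to the input image size before being overlaid on the image.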

The Grad-CAM outputs in Figure 2 show that the model can identify a specific local signature in the faulty class which heavily influences the prediction output. To build a successful model without deep learning, the exact nature of this feature would have to be known in advance and extracted manually from each audio sample, whereas here the neural network has identified it for us. It is expected that the presence of a leak in the Wessex Water data could be identified similarly.

Final Thoughts

At Ada Mode we believe acoustic data can be used more widely across industry for detecting and preventing failures. This is due to the rich amount of information contained in the data type that goes beyond that obtained from more standard instrumentation.

Water companies are making great strides to benefit from this data type, deploying up to 800 acoustic loggers a day. This has resulted in the detection and maintenance of 2800 leaks to date, representing a 60% success rate. Attempts to increase this success rate have involved combining the handful of features taken from the acoustic sensors with geological and spatial data to refine the scheduling of manual inspections. Ada Mode suggest taking this a step further by using the raw acoustic signals as input to a deep learning model that specialises in handling unstructured data such as this. This would allow a far richer feature set to be developed for modelling, and would have a good chance of improving on the current 60% success rate.